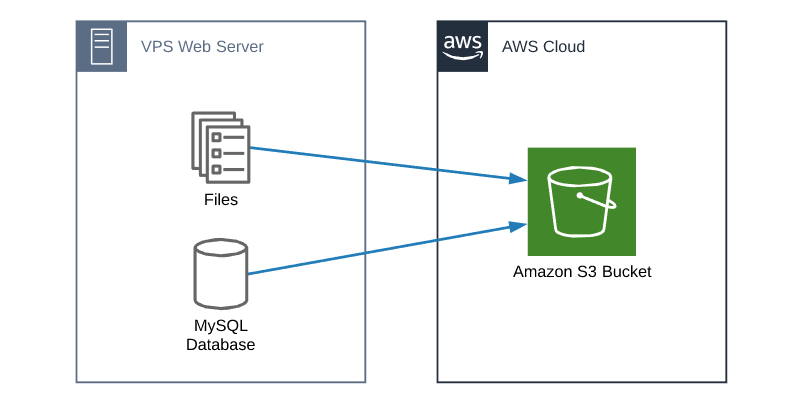

How I back up my VPS servers to an AWS S3 bucket

Lately I’ve been asked, and have seen others ask, how to back up a WordPress site or VPS server. I know many people use WordPress plugins, though I’m not a fan of that approach.

My answer has always been that I use a script that backs up my website files and databases to an AWS S3 bucket. While I’ve shared the scripts with a few people who have asked, I figured I’d write a post and share the information with everyone.

In this post I’ll share how I back up all the VPS servers I manage to Amazon S3 buckets.

Prerequisites

- Linux based VPS server (I use CentOS and Ubuntu)

- zstd, pigz, and gzip installed on your server

- AWS CLI installed and configured on your server

How to Back Up Your VPS Server to Amazon S3

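- The first step is to log into your AWS console and create two new S3 buckets (example: mySQLbackups & myFilebackups) in the region of your choice. Keep in mind that S3 only accepts lowercase bucket names, so your real bucket names will need to be all lowercase.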

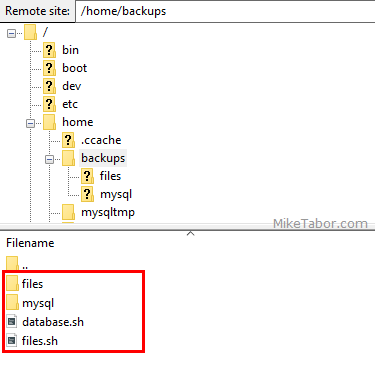

Note: This will be where your backups will live, so I suggest creating the S3 buckets in an AWS region away from your actual VPS server.

- Now SSH into your VPS server and create a couple of new folders and files. Under the HOME directory I create a backups folder, with a files folder and a mysql folder inside it (so /home/backups/files and /home/backups/mysql). Finally, in the backups folder I create two files, database.sh and files.sh.

- Next make both the database.sh and files.sh files executable via the SSH terminal:

      chmod +x filename.sh

- Open the database.sh file and copy and paste the following code:

      #!/bin/bash
      ################################################
      #
      # Backup all MySQL databases in separate files and compress each file.
      # NOTES:
      # - MySQL and ZSTD must be installed on the system
      # - Requires write permission in the destination folder
      # - Excludes MySQL admin tables ('mysql','information_schema','performance_schema')
      #
      ################################################

      ##### VARIABLES
      # MySQL User
      USER='root'
      # MySQL Password
      PASSWORD='MySQL_ROOT_PASSWORD'
      # Backup Directory - no trailing slash needed
      OUTPUT="/home/backups/mysql"
      BUCKET="NAME_OF_S3_BUCKET"

      ##### EXECUTE THE DB BACKUP
      TIMESTAMP=`date +%Y%m%d_%H`;
      OUTPUTDEST=$OUTPUT;
      echo "Starting MySQL Backup";
      echo `date`;
      # List all databases, stripping the table borders and the header row
      databases=`mysql --user="$USER" --password="$PASSWORD" -e "SHOW DATABASES;" | tr -d "| " | grep -v Database`
      for db in $databases; do
          # Skip the MySQL system schemas and any database starting with an underscore
          if [[ "$db" != "information_schema" ]] && [[ "$db" != _* ]] && [[ "$db" != "mysql" ]] && [[ "$db" != "performance_schema" ]] ; then
              echo "Dumping database: $db"
              mysqldump --single-transaction --routines --triggers --user="$USER" --password="$PASSWORD" --databases "$db" > "$OUTPUTDEST/dbbackup-$TIMESTAMP-$db.sql"
              # Compress the dump and remove the uncompressed .sql file
              zstd --rm -q "$OUTPUTDEST/dbbackup-$TIMESTAMP-$db.sql"
          fi
      done
      # Upload the dumps into a year/month/day folder in the bucket
      aws --only-show-errors s3 sync "$OUTPUTDEST" s3://$BUCKET/`date +%Y`/`date +%m`/`date +%d`/
      # Remove the local copies once they are uploaded
      rm -rf "$OUTPUTDEST"/dbbackup-*
      echo "Finished MySQL Backup";
      echo `date`;

Make sure to edit line 16 (PASSWORD) with your VPS MySQL root password and line 19 (BUCKET) with the name of your AWS S3 bucket.

- Save and close the database.sh file.

- Open the files.sh file and copy and paste the following code:

      #!/bin/bash
      ################################################
      #
      # Backup all files in the nginx home directory.
      # NOTES:
      # - gzip must be installed on the system
      #
      ################################################

      ##### VARIABLES
      OUTPUT="/home/backups/files"

      ##### EXECUTE THE FILES BACKUP
      TIMESTAMP=`date +%Y%m%d_%H`;
      OUTPUTDEST=$OUTPUT;
      echo "Starting Files Backup";
      # Stop if the domains folder is missing, so tar never archives the wrong directory
      cd /home/nginx/domains/ || exit 1
      # Archive and gzip every domain folder
      tar -czf $OUTPUTDEST/filesbackup-SERVER_HOST_NAME-$TIMESTAMP.tar *
      aws --only-show-errors s3 cp $OUTPUTDEST/filesbackup-SERVER_HOST_NAME-$TIMESTAMP.tar s3://AWS_BUCKET_NAME/
      # Remove the local archive once it is uploaded
      rm -f $OUTPUTDEST/filesbackup-*
      echo "Finished Files Backup";
      echo `date`;

This time edit the tar and aws lines, replacing SERVER_HOST_NAME with the name of your VPS server. Also in the aws line be sure to replace AWS_BUCKET_NAME with the name of your S3 bucket.

- Save and close the files.sh file.

- Next open crontab by typing:

      crontab -e

- Add the following two lines to the end of the crontab:

      0 0 * * * /home/backups/database.sh
      0 1 * * * /home/backups/files.sh

This will run the database backup script every night at midnight and the files backup script every night at 1am.

That is it. Now your VPS server will automatically back up to your AWS S3 buckets each night.
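The folder and permission setup from the steps above could also be scripted. The following is only a sketch: the create_backup_layout helper name is hypothetical, and the example call assumes the same /home/backups location the scripts use.

```shell
#!/bin/bash
# Hypothetical helper (not part of the original scripts): creates the
# backups folder with its files/ and mysql/ subfolders, creates the two
# script files if they do not exist yet, and marks them executable.
create_backup_layout() {
    local root="$1"
    mkdir -p "$root/files" "$root/mysql" || return 1
    touch "$root/database.sh" "$root/files.sh" || return 1
    chmod +x "$root/database.sh" "$root/files.sh"
}

# Example call, assuming the /home/backups path used by the scripts:
# create_backup_layout /home/backups
```

Because touch leaves existing files alone, running the helper again should not overwrite scripts you have already pasted in.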

Fine tuning the backup job and S3 storage

You can of course change when and how often the backups kick off by simply changing the scheduled crontab entry. Some of my servers just run the scripts daily, while others run them 2-3 times a day.

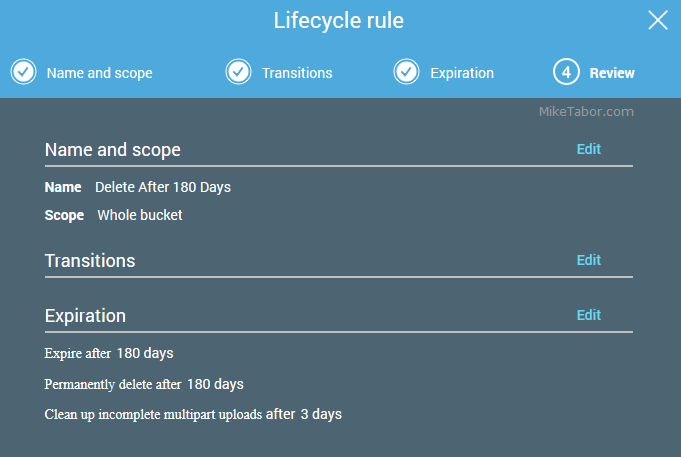

One last thing I would recommend is to create an AWS S3 Lifecycle Policy, if for nothing else than to manage how long the backups are stored in S3.

You can also use the policy to transition the backups to different S3 storage classes, such as moving them from Standard to Glacier after X number of days. You could also enable S3 Replication so that you’d have copies of the backups in different AWS regions.

There are tons of options within AWS on what you could do with the backups. It all depends on how important the backups are and how much you want to spend.
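As a rough illustration of such a lifecycle policy, the sketch below writes a rule that moves backups to Glacier after a number of days and deletes them after a longer period. The 30 and 365 day values, the rule ID, the lifecycle.json filename and the write_lifecycle_config helper are all assumptions picked for the example, not values from the setup described in this post.

```shell
#!/bin/bash
# Hypothetical helper: writes an S3 lifecycle configuration to a file.
#   $1 = output file
#   $2 = days until the backups move to Glacier
#   $3 = days until the backups are deleted
write_lifecycle_config() {
    local outfile="$1" glacier_days="$2" expire_days="$3"
    cat > "$outfile" <<EOF
{
  "Rules": [
    {
      "ID": "backup-retention",
      "Status": "Enabled",
      "Filter": { "Prefix": "" },
      "Transitions": [
        { "Days": ${glacier_days}, "StorageClass": "GLACIER" }
      ],
      "Expiration": { "Days": ${expire_days} }
    }
  ]
}
EOF
}

# Example (assumed values): Glacier after 30 days, delete after 365.
# write_lifecycle_config lifecycle.json 30 365
# aws s3api put-bucket-lifecycle-configuration \
#     --bucket NAME_OF_S3_BUCKET \
#     --lifecycle-configuration file://lifecycle.json
```

The empty prefix applies the rule to every object in the bucket, so you would run the aws command once per backup bucket.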

AWS IAM Backup Account Policy

When you are configuring AWS CLI on your VPS server I would suggest configuring it to use a new IAM user that will only be used for the backup jobs (so NOT your AWS Root account).

Below is the policy I have attached to my VPStoS3Backup IAM account:

    {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "s3:*",
                "Resource": [
                    "arn:aws:s3:::mySQLbackups",
                    "arn:aws:s3:::mySQLbackups/*",
                    "arn:aws:s3:::myFilebackups",
                    "arn:aws:s3:::myFilebackups/*"
                ]
            },
            {
                "Effect": "Deny",
                "NotAction": "s3:*",
                "NotResource": [
                    "arn:aws:s3:::mySQLbackups",
                    "arn:aws:s3:::mySQLbackups/*",
                    "arn:aws:s3:::myFilebackups",
                    "arn:aws:s3:::myFilebackups/*"
                ]
            },
            {
                "Effect": "Deny",
                "Action": [
                    "s3:DeleteBucket",
                    "s3:DeleteBucketPolicy",
                    "s3:DeleteBucketWebsite",
                    "s3:DeleteObject",
                    "s3:DeleteObjectVersion"
                ],
                "Resource": [
                    "arn:aws:s3:::*"
                ]
            }
        ]
    }

Be sure to replace mySQLbackups and myFilebackups with the names of the AWS S3 buckets you are using to store the backups.

The above policy restricts the IAM account to read and write in ONLY the buckets mentioned.

After the VFEmail hack, I added the last part of the policy, which DENIES the account the ability to delete. So if the IAM account is ever compromised, the hacker wouldn’t be able to delete all my backups.
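If you prefer the command line over the AWS console, creating the backup-only user might look something like the sketch below. The run and create_backup_user helpers, the backup-policy.json filename and the generated policy name are hypothetical. The commands would need to be run with credentials that are allowed to manage IAM, not with the backup user itself.

```shell
#!/bin/bash
# Hypothetical wrapper: prints the command when DRY_RUN=1, runs it otherwise.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Hypothetical helper: creates the backup-only IAM user, attaches the
# policy above as an inline policy, and generates access keys that can
# then be entered into `aws configure` on the VPS.
#   $1 = IAM user name, $2 = path to the policy JSON file
create_backup_user() {
    local user="$1" policy_file="$2"
    run aws iam create-user --user-name "$user" || return 1
    run aws iam put-user-policy --user-name "$user" \
        --policy-name "${user}-policy" \
        --policy-document "file://${policy_file}" || return 1
    run aws iam create-access-key --user-name "$user"
}

# Example: preview the commands without touching AWS.
# DRY_RUN=1 create_backup_user VPStoS3Backup backup-policy.json
```

With DRY_RUN=1 the helper only prints the three aws commands, which makes it easy to review them before running anything against your account.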

Additional notes

I have also placed the above backup scripts in a GitHub repository here.

For a simple WordPress plug-in solution I would suggest checking out Duplicator Pro as it supports AWS S3, Dropbox, Google Drive, MS OneDrive, and more.

If you are looking for a new hosting provider I would highly suggest checking out: UpCloud, Linode, or Vultr. I use all three for various projects and have been very happy with them all.

What is your backup process?

So that is my setup on how I back up VPS servers to AWS S3.

What are you using to back up your servers? Where are you storing the backups, locally or off-site?

If you have any suggestions on how I can improve my setup please let me know!

hi

-$db looks like a typo in the files.sh:

tar -czf $OUTPUTDEST/filesbackup-SERVER_HOST_NAME-$TIMESTAMP-$db.tar *

You are correct. It’s an empty variable so doesn’t do any harm, but certainly not needed.

I’ve updated the post and the Github repo. Thank you for the heads up!

-Michael

Is there a way to exclude some of the directories in the files backup?

cd /home/nginx/domains/

There are one or two domains that I don’t want to back up.
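One possible way to do this, assuming GNU tar as shipped with CentOS and Ubuntu, is tar's --exclude-from option, which reads names to skip from a text file. The sketch below wraps the tar step from files.sh in a hypothetical archive_domains helper. The exclude.txt path and the domain names in the example call are placeholders.

```shell
#!/bin/bash
# Hypothetical helper: same tar step as files.sh, but skips every name
# listed (one per line) in an exclude file.
#   $1 = directory holding the domains
#   $2 = absolute path of the archive to create
#   $3 = absolute path of the exclude list
archive_domains() {
    local src="$1" archive="$2" exclude_list="$3"
    # Run in a subshell so the cd does not leak into the caller.
    (
        cd "$src" || exit 1
        tar -czf "$archive" --exclude-from="$exclude_list" *
    )
}

# Example (placeholder names): list the domains to skip, then archive.
# printf '%s\n' 'domain-one.example' 'domain-two.example' > /home/backups/exclude.txt
# archive_domains /home/nginx/domains /home/backups/files/filesbackup.tar /home/backups/exclude.txt
```

For just one or two domains, adding --exclude='domain-one.example' directly before the * in the existing tar line should have the same effect without the extra file.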