Power off an unresponsive VM using ESXTOP

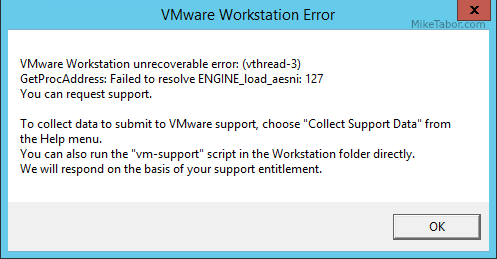

Recently we had some hardware issues in our primary datacenter, and during that time a few VMs became unresponsive and needed to be brought back online. The VMs had stopped responding to the normal vSphere commands to shut down or restart. I didn’t want to power cycle the entire ESXi host; I only wanted to power off the unresponsive VMs.

Here is a quick and easy way to do just that using ESXTOP.

How to kill an unresponsive VM using ESXTOP

- SSH into the host that the virtual machine is currently running on, using PuTTY or your SSH client of choice.

- Type esxtop to start ESXTOP.

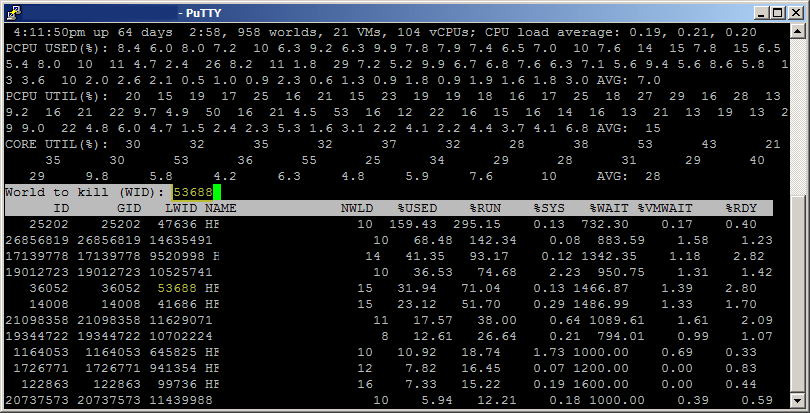

- Press c to enter the CPU view of ESXTOP.

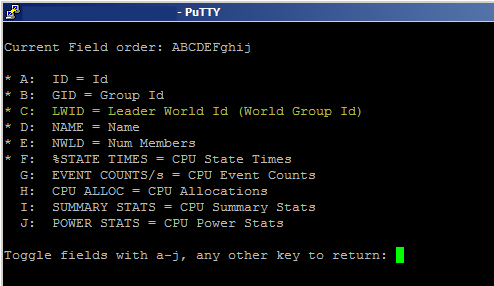

Note: It may be helpful to press Shift+V so that only VMs are shown.

- Press f to choose which fields are displayed, press c to turn on the LWID (Leader World ID) field, then press ENTER to return to the CPU view.

- Finally, press k to open the “kill” prompt, enter the LWID of the VM you want to power off, and press ENTER.

At this point the VM should be powered off. Keep in mind that this performs a hard power off, similar to pulling the power cord on a physical server, so only use it when the VM is not responding to any of the commands that would power it off gracefully.

How to kill a VM from the ESXi command line

You can get the same result, powering off the VM, without using ESXTOP at all.

- SSH into the host where the unresponsive VM is located and type the following:

vim-cmd vmsvc/getallvms

- Take note of the VM’s Vmid (the first column of the output), then power it off with the following command, replacing (vmid) with that ID:

vim-cmd vmsvc/power.off (vmid)

- If the VM still won’t power off, you can kill its process. Use lsof to find the PID of the process that has the VM’s .vmx (configuration) file open, then use kill to terminate it:

lsof /vmfs/volumes/datastore/vmname/vmname.vmx
kill -9 (pid)
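The two vim-cmd steps above can be wrapped in a small shell function so you don’t have to copy the Vmid by hand. This is a minimal sketch, not an official tool: find_vmid and power_off_by_name are hypothetical helper names made up for this example. It assumes the VM’s display name contains no spaces (awk only compares the second whitespace-separated column of the vim-cmd vmsvc/getallvms output) and that the first line of that output is the column header. It refuses to act unless exactly one VM matches.

```shell
#!/bin/sh
# Sketch only: power off a VM by display name from the ESXi shell.
# Assumptions: the VM name contains no spaces, and the first line of
# `vim-cmd vmsvc/getallvms` output is the column header.

# Hypothetical helper: read getallvms output on stdin and print the
# Vmid (first column) of every row whose Name (second column) equals $1.
find_vmid() {
    awk -v name="$1" 'NR > 1 && $2 == name { print $1 }'
}

# Hypothetical helper: look up the Vmid, hard power off the VM, and
# print its power state afterwards.
power_off_by_name() {
    name="$1"
    vmid="$(vim-cmd vmsvc/getallvms | find_vmid "$name")"
    # Vmids are plain integers, so word splitting counts the matches.
    set -- $vmid
    if [ "$#" -ne 1 ]; then
        echo "Expected exactly one VM named '$name', found $#" >&2
        return 1
    fi
    vim-cmd vmsvc/power.off "$1"
    vim-cmd vmsvc/power.getstate "$1"
}
```

After pasting these functions into an SSH session on the host, running power_off_by_name web01 (web01 being a placeholder name) would look up the Vmid, power the VM off, and then print its state via vim-cmd vmsvc/power.getstate. Like power.off itself, this is a hard power off.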

Thanks go to /u/Acaila on Reddit for pointing out this other method!

This is a nice post. I thought I would add that ghost PIDs and WIDs exist. If you run either of the commands listed in this post and the result is “PID or WID not found,” double-check the PID/WID; if it is correct, rebooting the ESXi host is the only fix.

We have only encountered this in our Horizon View cluster, and it happens for customers on ESXi 5.x and ESXi 6.x running View 5.x or 6.x.
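For the ghost PIDs mentioned above, one quick way to double-check a PID before concluding it is a ghost is kill -0, which asks whether a process with that ID exists without actually sending it a signal. This is a minimal sketch that assumes the host shell’s kill accepts signal 0 as POSIX shells do; pid_exists and kill_if_present are hypothetical helper names. It only covers PIDs used with kill, not the LWIDs entered in ESXTOP.

```shell
#!/bin/sh
# Sketch only: confirm a PID exists before sending SIGKILL, so a mistyped
# PID can be told apart from a process that truly refuses to die.
# Assumption: the shell's kill accepts signal 0 (probe without signalling).

# Hypothetical helper: succeed if a process with PID $1 currently exists.
# Note: this also fails if we lack permission to signal that process.
pid_exists() {
    kill -0 "$1" 2>/dev/null
}

# Hypothetical helper: send SIGKILL only when the PID is visible.
kill_if_present() {
    if pid_exists "$1"; then
        kill -9 "$1"
    else
        echo "PID $1 not found; double-check it before rebooting the host" >&2
        return 1
    fi
}
```

If kill_if_present reports the PID as not found even though you have confirmed it is the right one, that matches the ghost-PID situation described above.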