VMware ESXi 5 home lab upgrade

In the past I’ve been using a single server to run my VMware ESXi home lab. It was slow, old, big and loud. I’ve been meaning to upgrade my home lab for a while and just never got around to it, until now! When looking for new hardware I wanted the servers to be as compact, quiet, and energy efficient as possible while still having some horsepower.

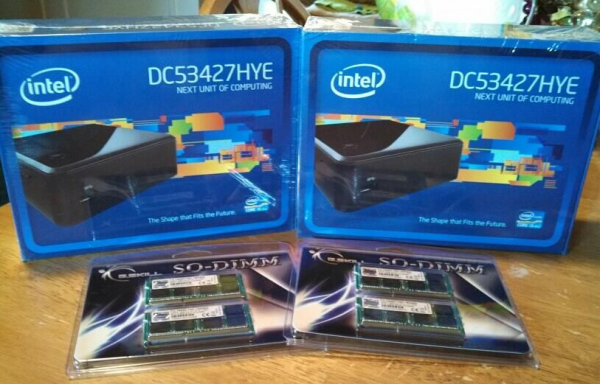

I debated going the whitebox route, as I build all my own desktops anyway. I also looked at several other options such as the HP ML310e server and the Shuttle XH61V barebones machine, but ultimately decided to go with two Intel NUC i5s.

Intel NUC i5 DC53427HYE Features:

- Uses very little energy

- Completely quiet

- Supports up to 16GB RAM

- A dual core CPU that scores nearly 3,600 in PassMark benchmarking

- Includes vPro, which allows me to easily run both NUCs headless

- Extremely small, with a footprint of roughly 4 1/2″ square

I loaded both of my NUC i5 ESXi hosts with 16GB of G.SKILL DDR3 RAM and a SanDisk Cruzer Fit 8GB flash drive to install ESXi 5.0 onto. The flash drives are smaller than a thumbnail and provide more than enough space to install ESXi.

The only “downsides” I’ve found with the Intel NUC are:

- The NUC doesn’t come with a power cord, so you’ll have to buy a clover leaf style cord if you don’t already have any.

- Only one NIC, but really for home use this isn’t a terribly huge deal.

- Speaking of the NIC, the Intel 82579V NIC on the NUC isn’t on the standard driver list. This is easily fixed, though, by downloading the correct driver (net-e1000e-2.3.2.x86_64.vib) (mirror) and injecting it into the ESXi ISO using ESXi-Customizer.

Once I had the correct NIC driver added to the ESXi ISO, I simply followed the same steps from my Install ESXi 5.1 from a USB Flash Drive post, then plugged the USB drives into the NUCs and performed the install from my home computer over a remote connection, thanks to vPro!

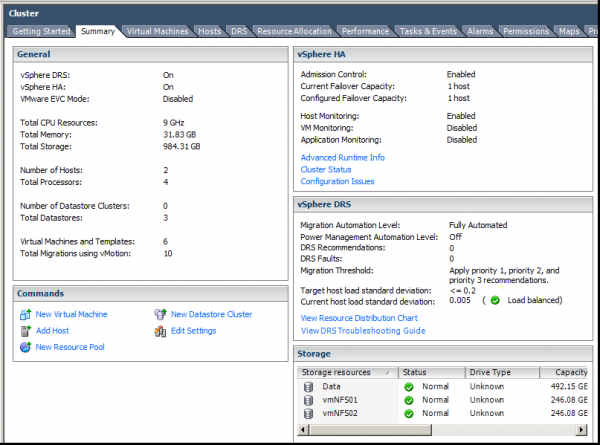

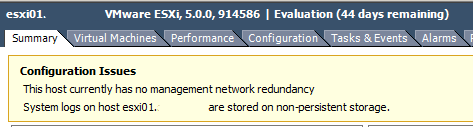

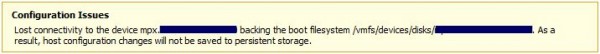

I’ve been running this setup for several weeks now and everything seems to be running perfectly. vSphere is only reporting two minor warning messages:

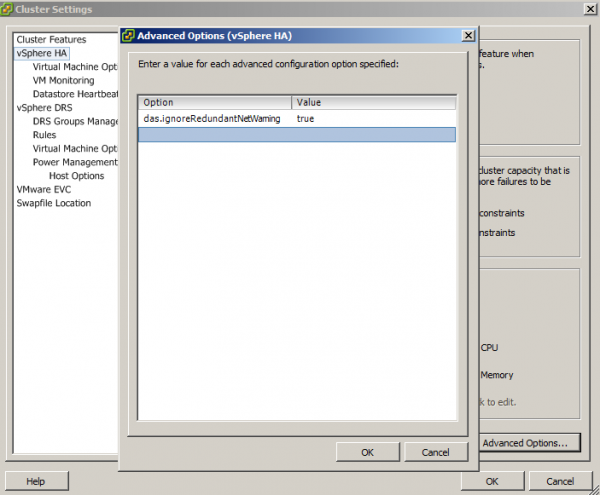

The first warning message “This host currently has no management network redundancy.” can be silenced by setting das.ignoreRedundantNetWarning to true in the HA Advanced Options:

- Open the vSphere Client and right click on the cluster and click on Edit Settings.

- Select vSphere HA and click on Advanced Options.

- In the Options column type: das.ignoreRedundantNetWarning

- In the Value column type: true

- Click OK.

- Finally, right click on each host and click on “Reconfigure for vSphere HA”.
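For anyone who would rather script this than click through the client, the steps above can be sketched with pyVmomi, VMware’s Python SDK. This is only a rough sketch and not something from my original setup: it assumes pyVmomi is installed and that `cluster` is a `vim.ClusterComputeResource` already looked up from a vCenter connection. The function names are made up for illustration.

```python
HA_OPTION_KEY = "das.ignoreRedundantNetWarning"


def ha_option_pairs():
    """Return the HA advanced option as plain (key, value) strings."""
    return [(HA_OPTION_KEY, "true")]


def silence_redundancy_warning(cluster):
    """Set the advanced option on a cluster and return the vCenter task.

    `cluster` is assumed to be a pyVmomi vim.ClusterComputeResource.
    """
    # Imported here so the rest of the file works without pyVmomi installed.
    from pyVmomi import vim

    spec = vim.cluster.ConfigSpecEx()
    spec.dasConfig = vim.cluster.DasConfigInfo()
    spec.dasConfig.option = [
        vim.option.OptionValue(key=key, value=value)
        for key, value in ha_option_pairs()
    ]
    # modify=True applies the spec as an incremental change to the cluster.
    return cluster.ReconfigureComputeResource_Task(spec, True)


def reconfigure_hosts_for_ha(cluster):
    """Scripted version of "Reconfigure for vSphere HA" on every host."""
    return [host.ReconfigureHostForDAS_Task() for host in cluster.host]
```

Wait for the task returned by `silence_redundancy_warning` to finish before calling `reconfigure_hosts_for_ha`, the same way you would click OK before reconfiguring each host in the client.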

Second warning message: Since ESXi 5 is installed on the USB thumb drive, I am given the second warning message “System logs on host are stored on non-persistent storage.”

I have since set up my Synology DS412+ NAS to also host a syslog server and pointed each host to it. I will have a future post about how to enable the syslog server on the Synology and how to make ESXi send logs to the syslog server.
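As a rough sketch of the ESXi side, the host can be pointed at a remote syslog server with a handful of `esxcli` commands run over SSH. The small Python helper below only builds those command lines so they can be pasted into each host. The server address, port, and protocol are placeholders for whatever your own syslog server uses, and the helper name is made up for the example.

```python
def syslog_commands(server, port=514, proto="udp"):
    """Build the esxcli commands that point an ESXi host at a remote syslog server."""
    loghost = f"{proto}://{server}:{port}"
    return [
        # Tell the host where to send its logs.
        f"esxcli system syslog config set --loghost='{loghost}'",
        # Make the syslog service pick up the new setting.
        "esxcli system syslog reload",
        # Open the outbound syslog rule in the ESXi firewall.
        "esxcli network firewall ruleset set --ruleset-id=syslog --enabled=true",
        "esxcli network firewall refresh",
    ]


if __name__ == "__main__":
    # 192.168.1.50 is a placeholder address, not a real NAS from this lab.
    for command in syslog_commands("192.168.1.50"):
        print(command)
```

Once logs are arriving on the syslog server, the non-persistent storage warning should clear on that host.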

All in all I’m very pleased with the upgrade. The new NUC i5s are having no trouble running the virtual machines and I believe I can load them up with several more VMs with no issues. Having two hosts now also opens up more things I can play with while at home. The lab now uses MUCH less power than before, and the hosts are completely silent and out of sight thanks to their tiny footprint.

For my next upgrade, I’d really like to replace my Synology DS412+ with a DS1813+ so I can keep my RAID 10 while adding a hot spare, with room left to add SSD cache as well as a single SSD for VMs that need high IOPS.

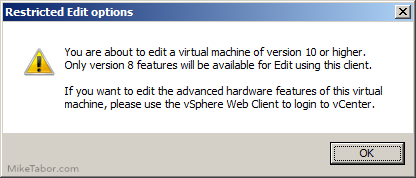

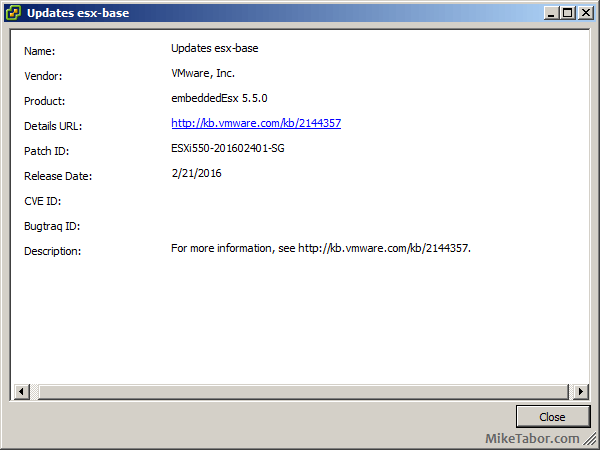

UPDATE: My Intel NUC home lab is now running ESXi 5.5 without any issue. I completed the upgrade via command line which is very quick and easy.
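A command-line upgrade like this generally comes down to a few commands run over SSH on each host, with its VMs shut down or moved off first. The sketch below only assembles those commands. The depot URL and image profile name are inputs you need to look up yourself, and the values in the example are illustrative rather than a record of exactly what was run on these NUCs (the depot shown was VMware’s public online depot at the time and may have moved since).

```python
def upgrade_commands(depot_url, profile):
    """Build the commands for an in-place ESXi upgrade from an online depot."""
    return [
        # Allow the host to make outbound HTTP(S) requests to reach the depot.
        "esxcli network firewall ruleset set -e true -r httpClient",
        # "profile update" upgrades VIBs but does not remove ones already
        # installed, so an added NIC driver is kept.
        f"esxcli software profile update -d {depot_url} -p {profile}",
        # The new image only takes effect after a reboot.
        "reboot",
    ]


if __name__ == "__main__":
    # Example values only; check the depot for the profile you actually want.
    example = upgrade_commands(
        "https://hostupdate.vmware.com/software/VUM/PRODUCTION/main/vmw-depot-index.xml",
        "ESXi-5.5.0-1331820-standard",
    )
    for command in example:
        print(command)
```

The choice of `profile update` over `profile install` matters on hardware like the NUC: `install` replaces the whole host image, which would drop a custom driver that is not part of the new profile.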

UPDATE 2: One of my SanDisk Cruzer Fits has failed. I have since added a third NUC to my ESXi home lab, and in doing so I’ve replaced all of the Cruzer Fits with SanDisk Cruzer Blades. They are not as small as the Fits but are still very small and have been working without any problems so far.

I’m considering doing a similar setup. I was thinking about the i3 instead of the i5 to save a little money. Why did you decide to go with the i5 instead of the i3 nuc?

I chose the i5 because of the slightly better benchmark performance over the i3 and, more so, because the i5 has vPro support. With this I don’t have to connect a keyboard/mouse/monitor to the machine when I want to access the machine directly.

Hi there, been looking at this. Any ideas on a compatible additional NIC?

I have not tried any additional network adapters so far, nor do I know of any that will work “without a doubt”.

Do you use local storage or do you run it with NFS only?

Dominik,

I currently use NFS to the DS412+ and so far things have been performing nicely. Though I’d like to add an mSATA SSD to each of the NUCs so that I can also play with vFlash.

I have an Intel board and use vPro for remote access to it. When I tried to upgrade to ESXi 5.5 it “pink screens” (only if accessing via vPro). Even when ESXi 5.5 is up and running, if I remote in using vPro it “pink screens” the system. Have you tested 5.5? I really want to use vPro for remote access but can’t now. It runs fine with ESXi 5.1.

What model board are you using?

Both my Intel NUC’s are currently running ESXi 5.5 – I upgraded from ESXi 5.0 to 5.5 via command line and both have been running 5.5 without any issues.

Board = Intel DQ77MK with an i7 2600 (non k) processor.

ESXi 5.5 runs fine. The problem I have is using the vPRO Remote Console to access the PC using VNC Viewer Plus. Can you connect to yours using it?

Yes I’m able to use vPro with no issues. However I’m not using VNC Viewer Plus but instead using Real VNC (free edition) along with the Intel vPro software.

You might want to try that method. Also there appears to be a new BIOS update released just last month for the DQ77MK motherboard that might not hurt to update.

BIOS update: Yep, that was the first thing I did.

RealVNC (Free Edition): Ah ha so you are using the Free Method which….from what I read…… will work for most remote purposes but you will lose some functionality like IDE redirect, encryption, and the ability to power the machine on and off (which I like)

Can I confirm you are using this method as noted here (I will then test using mine)

http://www.howtogeek.com/56538/

I don’t want to hog up this thread. Not sure if there is a method to PM you direct as I am very interested in getting this working.

That looks to be exactly how I use vPro in my lab. Being a home lab I wasn’t overly concerned about the features of the paid version. Plus it’s free. :) Try that guide and let me know how it works for you as I’m interested to see if it performs any better for you. For me it works perfectly.

Just finished testing.

It still pink screens the second I connect using vPro KVM (using your method). A shame, and I will now return to ESXi 5.1 as I suspect it has something to do with the CPU instructions (says I, who really hasn’t a clue). KVM access is critical to my needs and 5.1 works fine. Thanks a million for your replies. I now need to find a source for troubleshooting this. Not sure whether it’s i7 2600 or motherboard related. ESXi 5.5 just doesn’t like something (suggestions welcome). Boo hoo!

You’ve stumped me. I’m not a vPro guru but I can’t think of anything right off hand that would cause ESXi to purple screen when using vPro. What is the error code on the purple screen? Have you gone down that path yet?

If you do find a fix, I’d be interested in hearing what it might be!

I get a “Exception in World 32804 error”

You know the VMware KB, it’s mostly useless. Here is a capture if you’re interested:

https://www.dropbox.com/sh/4eau2ieb5pim2qg/FDH6Fu0nhl#lh:null-ESXi5.5_PSOD.mp4

If I ever find the cause I will let you know, but I have exhausted most avenues at this stage so will stick with 5.1 for now.

Love what you’ve done here. I am about to copy you.

Great! Let me know your thoughts once you’ve built your new lab.

Great posting!

I’ve got a similar setup to yours (DS412+; using NFS, iSCSI seemed slow) but with a Micro-ATX i5 quad core (16GB RAM), and I’m looking to replace it with the Intel NUC because of the low power consumption.

Adding a driver to the ISO sounds quite doable.

Do you have a good howto reference to add this intel driver?

Hope to hear from you,

Eric

Eric, my home lab is currently running ESXi 5.5 without any issues. The upgrade from 5.0 to 5.5 was very easy via command line.

I’m sorry I haven’t replied to your response yet.

Installed ESXi 5.5 on two Intel® NUC Kit D54250WYK with 16 gigs each and one with a 120 gb ssd.

Modified that same one into a fanless casing of TranquilPC.

It’s a great low power and silent server. Awesome!

Only downside is that it hasn’t got vPro which I was expecting.

Thanks for this great site!

How many VMs can you run on each host without seeing any performance degradation?

I haven’t tried to really load the NUCs, but to give you an idea, I currently have my SQL VM, Domain Controller VM, Plex media server VM, and Minecraft server VM on one host. The host summary shows I’m using 309MHz and 6GB of RAM as I type this.

The other host has Virtual Center VM and a Win7 box I use to download junk to and that host is currently using 650MHz and 7GB of RAM.

I think with most environments memory is the most used resource, but even so I feel I have plenty of room to grow if I feel the need to spin up more VMs. I’m sure I could fit many Damn Small Linux VMs on there if I wanted.
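To put those numbers in perspective, here is a quick back-of-the-envelope check using the figures quoted above (16GB per host, with 6GB and 7GB in use). It is only rough arithmetic on reported usage and ignores hypervisor overhead and HA admission control. The function and variable names are made up for the example.

```python
def memory_headroom_gb(host_total_gb, used_gb):
    """Memory left on a host after subtracting what is currently in use."""
    return host_total_gb - used_gb


HOST_TOTAL_GB = 16
used_gb = {"host1": 6, "host2": 7}

headroom = {
    name: memory_headroom_gb(HOST_TOTAL_GB, used)
    for name, used in used_gb.items()
}
combined_used = sum(used_gb.values())
# If one host went down, would everything fit on the one that is left?
fits_on_one_host = combined_used <= HOST_TOTAL_GB

print(headroom)                         # {'host1': 10, 'host2': 9}
print(combined_used, fits_on_one_host)  # 13 True
```

So at current usage either host could, on paper, carry the whole lab by itself with a few GB to spare.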

Thx, that’s good to know. I just purchased a Shuttle SH87R6 with:

Core i7 Haswell 3.4GHz

32GB RAM

120GB SSD – For vCenter

2TB 7200rpm – for local Datastore

I plan on using this as the first of 2 hosts. Eventually, when I get more funds, I will buy the second host. Then I will add a NAS using iSCSI and configure vMotion, HA and DRS. Right now I just need a simple VMware lab environment to test some Microsoft solutions (SCCM, Server 2012, etc…)

I hope this setup will be sufficient for now:)

Thx again for the response.

That machine should have no problems operating as a small lab for you. My main PC runs an i7-3770 cpu with 32GB RAM and I used to run my lab within VMware Workstation before I got my two NUC units.

Really? Wow! That makes me very excited since this is the first time I’m setting up a VMware home lab. Did you set up any special VLANs for storage or vMotion traffic?

Thx

Hi Michael,

Thanks for sharing this info! Very helpful. 2 questions:

1. I assume you don’t have any troubles testing High Availability or Fault Tolerance w/ single NICs in this setup? If someone was studying for their VCP, is there anything we wouldn’t be able to practice w/ this setup?

2. Now, that you’ve probably been running this setup for a while, what quirks or road blocks have you run into that you wouldn’t mind sharing?

vonsyd0w – I have no problems using all the features such as HA and FT. For as simple as these “hosts” are, they perform very well for my needs and I believe they would make a very nice setup for someone to use to practice for their VCP. It would be nice to have hosts with multiple NICs and more memory: more NICs to set the hosts up more “real world”, with separate networks for VM traffic, management, iSCSI, etc., and more memory as I’d like to eventually set up a small VMware View lab too. If you have the room for a larger server like the HP ML310e, Dell C1100 or Dell 6100, I would probably go that route just for the added memory and the ability to add multiple NICs. I unfortunately don’t have the room right now.

That said, these machines are incredibly small, silent, have a high WAF, and are handling with ease the load I’m putting on them. The only quirk with the NUCs is what I’ve already mentioned with the NIC drivers, but that is easily fixed. I’ve seen Intel release another version or two of the NUC since I made my purchase, but the model I’m running is still the only version that also offers vPro, which is nice for managing the hosts should I need to.

I just set up a two NUC (D54250WYK) home lab with ESXi 5.5. Each NUC has 16GB RAM and a 120GB mSATA SSD. Trying to figure out what to economically use for a NAS device now. I ordered an HP v1910-24G switch (overkill for home, I know, but it was only a couple $ more than the Cisco SG300-10).

One question is how you are handling vCenter? Did you use the appliance and if so, that in itself will take up one whole NUC right? Did you put vCenter on a separate box? Not use vCenter at all?

To start playing I have the vCenter appliance running under Fusion on my MacBook Pro. Not sure how that will affect things when I put it to sleep and shut the laptop off, though. Will that mess anything up?

edisoninfo,

For a NAS, I can’t recommend Synology enough. I currently have the DS412+ and love it. Been considering for a while now upgrading to their DS1813+ just to add more drives. The HP 1910 switch is a great choice and I too would have gone with it if it didn’t have a fan, but instead went with an HP 1810-24g v2 switch.

As for vCenter, I have it running as a VM using Windows 2008 R2. During the install I gave the VM 8GB RAM and after it was complete scaled the memory back to 4GB. I could probably scale it back a bit more if I wanted but I’m not running into memory contention at the moment. Regarding vCenter, your VMs can and will still run if vCenter is powered off or ‘asleep’, but you’ll lose things like DRS and the ability to manage or reconfigure HA (HA failover itself keeps working on the hosts once it has been configured), along with other vCenter functionality. I would not recommend running vCenter in this manner. Best bet is to either run it as a standalone VM or use the appliance.

Hope this helps and enjoy your new lab!

Hi Michael,

I’ll buy your DS412+ (grin) then you can get the DS1813+! I am looking at the TS-269L unit. It only has two drives but for the lab, that is fine. I have a 5 bay Drobo FS but it is older and doesn’t seem to do NFS or iSCSI as far as I can tell.

I understand the “no fan” desire for the 1810 switch but the 1910 has a little layer 3 routing. I guess for single-NIC NUCs, that is not all that useful though.

For vCenter, I can move the appliance from my Mac to the NUC, but can I run it with only 1 or 2 cores and 4GB RAM? I hate to suck up the entire NUC just for vCenter.

– Gary

Gary,

I have a feeling selling the DS412+ won’t be the hard part, it’s getting the funds for the DS1813+ that tends to be more of an issue. ;)

Just FYI – Synology has a nice two bay NAS, the DS713+, that’s VMware compliant which I don’t believe is the case for the QNAP. I know I’m biased but I like Synology!

I don’t see any reason why you couldn’t run the vCenter appliance with one core. All of my VMs, with the exception of my Plex media server, run with one core.

I ordered a Synology DS214+ earlier today. Hope I didn’t mess up. It didn’t really mention vmware but it did say it had iscsi and was in my price range. It already shipped so I’ll have to wait and see if it works. I’ll let you know…

Update: Called Synology; the DS214+ supports iSCSI but not virtualization. So I ordered the DS713+ and will have to return the DS214+, sigh….

Well, so close. I have the DS713+ configured and created an iSCSI LUN. ESXi 5.5 sees the LUN in the Dynamic Scan screen of the iSCSI software adapter, but it will not show up in the “Add Datastore” screen. I SSH’d into the host and can ping the SAN. Not sure what else to try next. Synology support just said “gee it should work”. I am not a stranger to adding iSCSI datastores, but this one has me baffled.

I’m sending you an email now; I might be able to provide better help there than in the comments.

Hi,

I am new to VMware ESXi server. I am trying to install VMware-VMvisor-Installer-5.5.0.update01-1623387.x86_64 on an Intel DZ75ML-45K with the Intel(R) 82579V Gigabit Network Connection. Every time it shows the error “Physically Network Adapter Not Connected……”. I tried ESXi-Customizer too, with many variants of .vib, .tgz and offline_bundle files, but I can’t install the ESXi server. Please, can anyone help me? Thanks in advance.

Justin,

I can confirm that the .vib driver file in the (mirror) link above does work for the Intel 82579V network adapter. Give that link a try instead, then use ESXi-Customizer to inject the driver into the ISO file and you will be good to go.

I’ve used this same process from ESXi 5.0 through the most current ESXi 5.5 Update 2 version without any problems.

I just set up a NUC (D54250WYK) too and see strange behaviour on the network: when I try to ping the NUC (ESXi 5.5 installed) I receive two replies to a single ping, or sometimes no response at all, which means it’s not a reliable IP connection. I created a custom ISO with the suggested drivers as described. Any suggestions on what I can do?

RalfM,

The first thing I’d try would be to re-create the customized ISO image, again using the NIC driver linked above, and try re-installing. This isn’t the same model I use myself but others have commented here using the same model you’re using without any issues.

Hope this helps and keep us updated!

-Michael

Hi Michael,

Thanks for your suggestion. I tested a different way successfully:

– used the older VMware-VMvisor-Installer-5.1.0.update01-1065491.x86_64.iso

– created custom iso (binding net-e1000e-2.3.2.x86_64.vib and sata-xahci-1.10-1.x86_64.vib) with esxi-customizer

– installation and configuration on D54250WYK (if an installation with a bootable usb-stick is used, it can occur that the nic has to be activated manually in the config area of esxi-GUI)

– first network check with reliable ping replies done (strike!!!)

– then I did Mike’s (https://miketabor.com/easy-esxi-5-5-upgrade-via-command-line/ ) online update

Everything is fine now.

It seems that the integration of the two vib’s into the newer installation process of an ESXi-5.5*.iso creates an error in the nic-driver landscape inside the esxi-core. If I have time I’ll try again with your driver sources – but I’m sure that I used the same from the virten.net sources.

-Ralf

Hi Michael,

Great article. Thanks for all your time and effort.

Just put together a Home Lab as well. 2 Hosts, SG-300 L-3 Switch, Synology 1513+.

My question is regarding the Synology configuration so it can be used by both Hosts:

I created a bond with the first two NICs and tested ping between the ESXi1 and the IP of Bond 1, additionally was able to establish an iSCSI connection to LUN 1, LUN 2… Looking good so far

My question is regarding the connection to ESXi2 :

Can I connect ESXi2 to the same IP (Bond 1)? Or do I need to create a second bond (NIC 3 and 4)?

If I need to create the second bond, can the new IP be on the same VLAN as bond 1, or do I need a new VLAN?

As always, appreciate your help

cmayo,

Thanks for the comment and the kind words. To answer your question you do not need a second bond to connect your additional ESXi host(s). However, you DO need to make a change to the iSCSI target if you haven’t already and that’s to enable “Allow multiple sessions from one or more iSCSI initiators”.

Sounds like you’ve got a good looking home lab!

-Michael

Thanks Michael. I found the settings under the Advanced Tab.

Appreciate your help

Cesar

Hi !

Great articles! They help me a lot. I have a problem: I have an Intel NUC also, but when I install ESXi I do not see all my SSD disks.

It displays the memory correctly but not the second mSATA SSD.

My second question is about using an external hard drive. Do you think it is possible?

Thks !

Donald,

You will need to load the SATA AHCI drivers in order for the SSD to be detected. I’ve posted about this process in a previous post here: https://miketabor.com/esxi-5-fails-install-intel-nuc-error-no-network-adapters/

-Michael

Hi! Thanks, I did it. It seems that I have another problem, because even with a Windows installation I see just one SSD. Thanks.

Maybe a physical problem, but it is really strange that the RAM works on another Intel NUC. Or do you think the NUC doesn’t really support two mSATA SSDs?

Donald,

I misread your first comment and didn’t pick up on you trying to use two mSATA SSDs. The NUC only supports one mSATA drive; the other mini-PCIe slot is meant for a wireless adapter.

There are taller NUC models that add support for a 2.5″ SSD or laptop drive as well, if you want to use two drives. However, those models do not include vPro, nor do their CPUs benchmark as well as the model I run.

-Michael

OK, thanks. I see. I plan to use an old Raspberry Pi then to set up a NAS server in order to solve my storage problem.

Donald

Thks