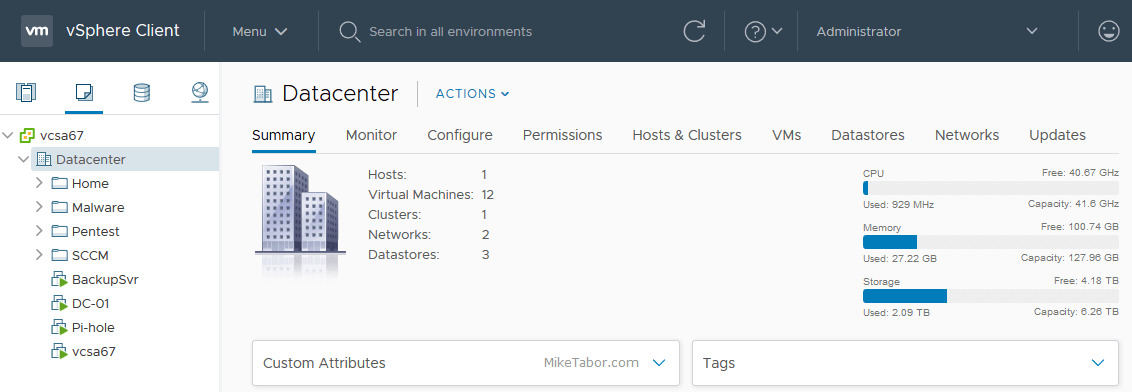

My VMware ESXi Home Lab Upgrade

Although my career right now is focused more on the cloud, in Amazon Web Services and Azure, I still use my home lab a lot.

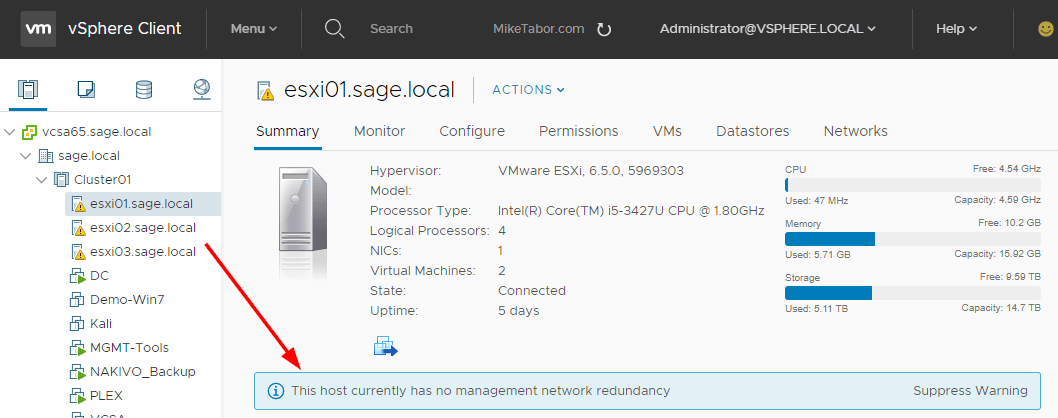

For the last 5+ years my home lab consisted of 3x Intel NUC’s (i5 DC53427HYE), a Synology NAS for shared storage and an HP ProCurve switch. This setup served me well for most of those years. It allowed me to earn many of the certifications I have, progress in my career and have fun as well.

At the start of this year I decided it was time to give the home lab an overhaul. At first I looked at the newest generation of Intel NUC’s but really wasn’t looking forward to dropping over $1,300 on just partial compute (I’d still need to buy RAM for each of the 3 NUC’s). I also wanted something that just worked, with no more fooling around with network adapter drivers or doing this tweak or that tweak.

I also no longer needed something with a tiny footprint, and I questioned whether I really needed multiple physical ESXi hosts. My home lab isn’t running anything mission critical, and I could always build additional nested VMware ESXi hosts on one powerful machine if I needed them.

So in the end, the below is what I settled on: replacing all of my compute and most of my networking, and adding more storage!

Compute

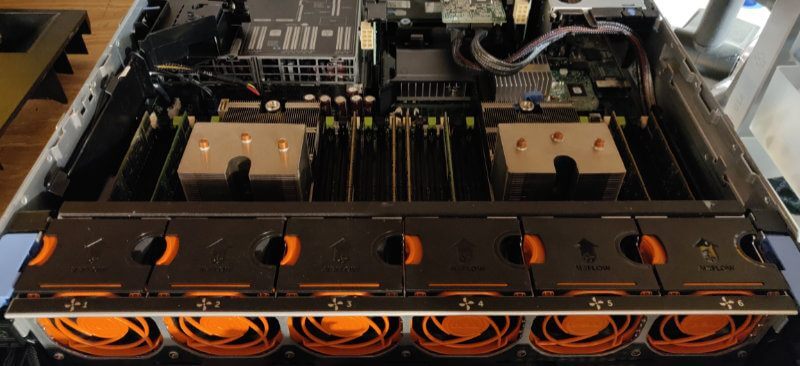

Dell R720 2u server

2x Xeon E5-2650 v2 @ 2.60GHz (16 cores / 32 threads total)

8 x 16GB PC3-12800R Samsung RAM (128GB total)

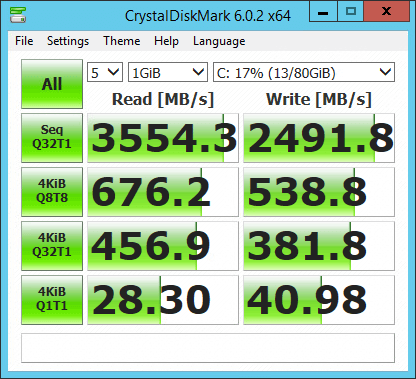

Samsung 970 EVO 1TB M.2 SSD (primary lab storage)

Samsung 860 EVO 500GB SATA SSD (secondary lab storage)

Generic PCIe to M.2 NVMe adapter for 970 EVO

PERC H710P RAID adapter with 1GB cache and BBU

iDRAC Enterprise

The old Intel NUC machines served me well for the last several years, but they certainly don’t compare to the power of this new server. A single Xeon E5-2650 v2 CPU benchmarks far better than all three of the NUC’s combined, and this server has dual CPU’s. CPU is certainly not a concern.

Going from 48GB of memory in total to 128GB of RAM, I am no longer powering off some machines to power up others – at least not at the moment. ;)

Thanks to the new local storage, virtual machines boot up nearly instantly. The Samsung 970 EVO is INSANE!

Storage

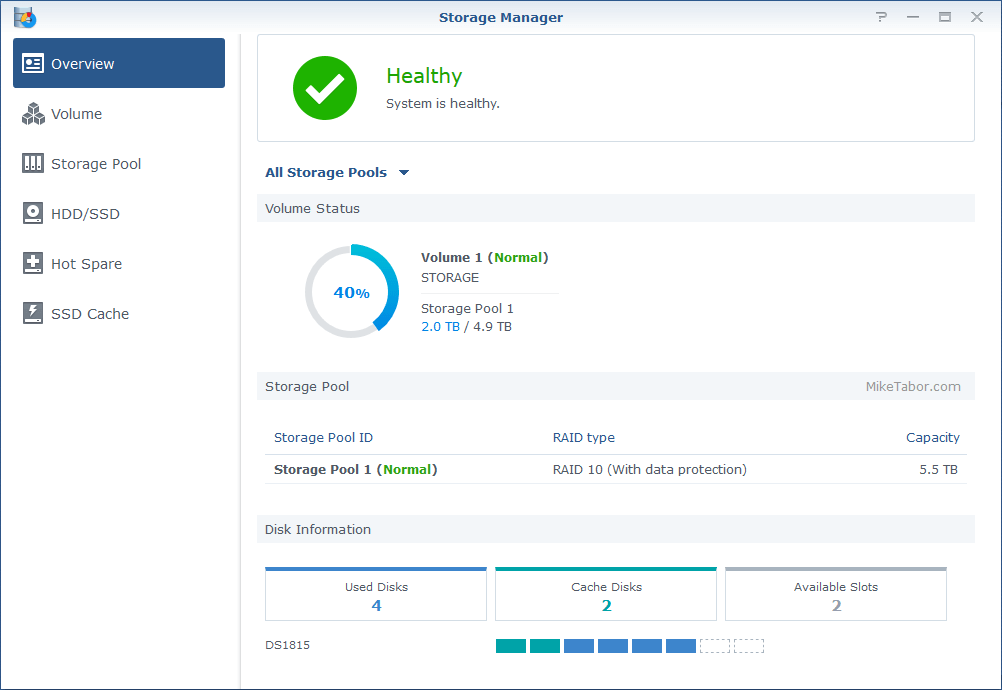

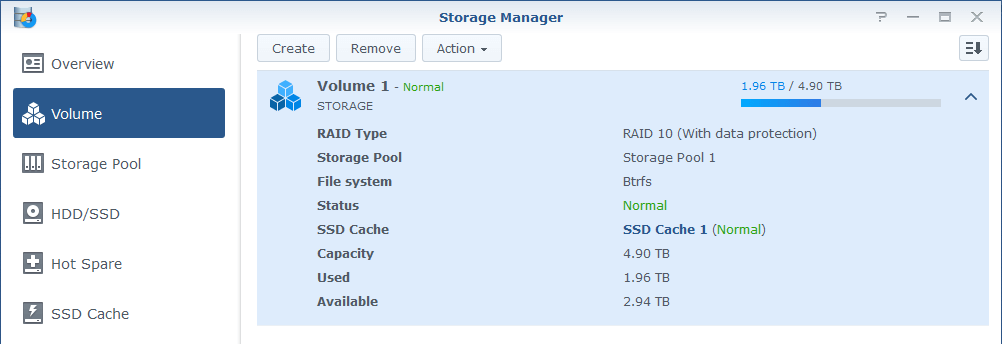

Synology DS1815+ with 16GB RAM

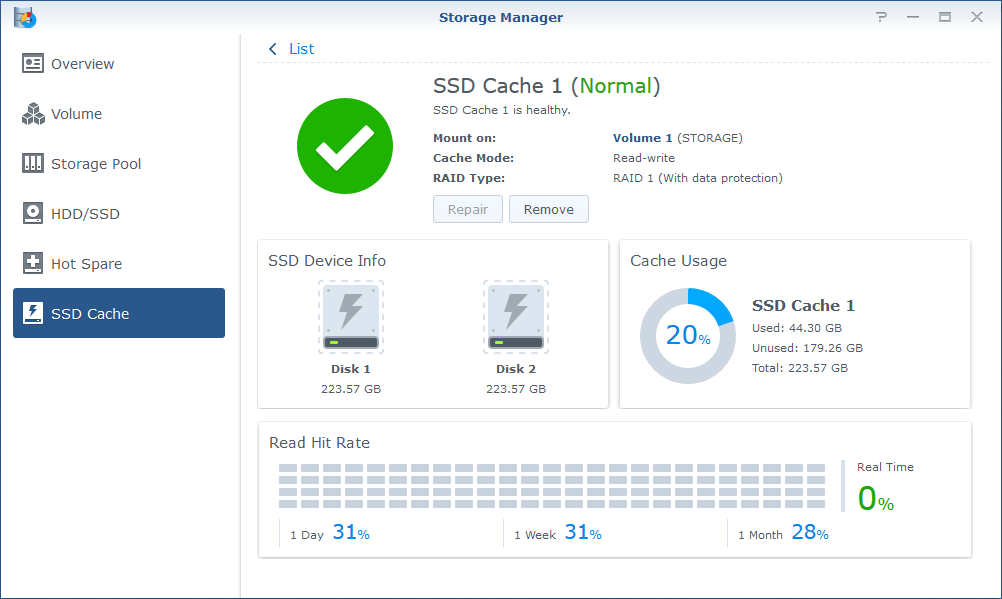

4x Seagate ST3000VN000-1 3TB HDD (RAID-10)

2x SanDisk Ultra II 240GB SSD (RAID-1 Read/Write Cache)

The Synology NAS is now used to store personal documents and photos that are sync’ed to my multiple devices using Synology Cloud Station Drive or DS Photo – I really like that pictures from my phone are automatically sent to my Synology.

It also stores my movies for my Plex media server and serves as a local backup repository.

Network

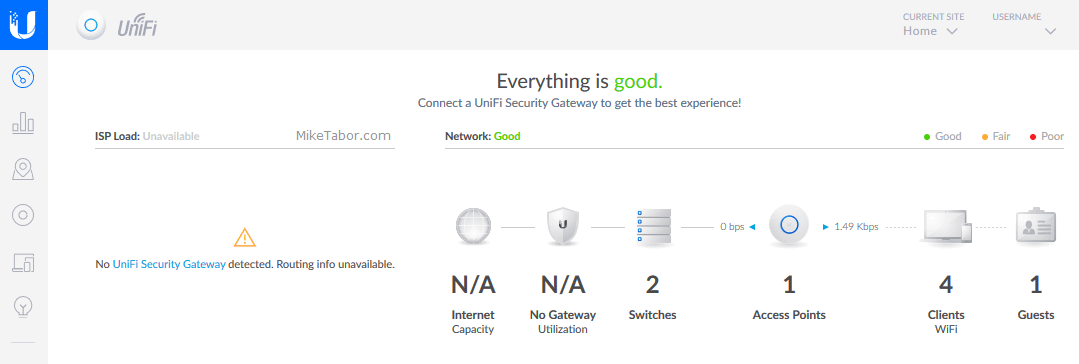

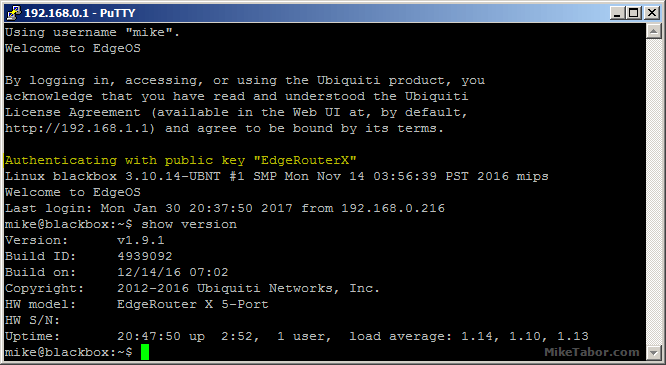

Ubiquiti EdgeRouter X

Ubiquiti UniFi 24 port switch

Ubiquiti UniFi 8 port POE switch

Ubiquiti UniFi UAP-AC-LR

All of the Ubiquiti UniFi network gear is managed by a Ubiquiti Controller hosted on a Linode VPS.

The network has been broken up into the following VLANs:

- VLAN 1 – Main network where my PC, vCenter, PLEX, and so on reside.

- VLAN 10 – Guest Wifi Network.

- VLAN 20 – Internet of Things for my thermostat and Amazon Dot (which I never use).

- VLAN 30 – Lab network for machines I don’t necessarily want on the main network.

- VLAN 40 – Pentest network for the penetration testing VM’s.
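The VLAN layout above can be sketched as a small lookup table. This is only an illustration: the VLAN IDs and purposes come from the list, but the `192.168.<id>.0/24` subnets and the helper names `subnet_for` and `vlan_of` are hypothetical placeholders, not the addressing actually used in this lab.

```python
import ipaddress

# VLAN IDs and purposes are taken from the list above.
VLANS = {
    1: "Main",
    10: "Guest WiFi",
    20: "IoT",
    30: "Lab",
    40: "Pentest",
}


def subnet_for(vlan_id: int) -> ipaddress.IPv4Network:
    """Return the assumed /24 for a VLAN ID (hypothetical addressing scheme)."""
    if vlan_id not in VLANS:
        raise KeyError(f"unknown VLAN {vlan_id}")
    return ipaddress.ip_network(f"192.168.{vlan_id}.0/24")


def vlan_of(address: str):
    """Return the VLAN ID whose assumed subnet contains the address, or None."""
    ip = ipaddress.ip_address(address)
    for vlan_id in VLANS:
        if ip in subnet_for(vlan_id):
            return vlan_id
    return None


if __name__ == "__main__":
    # Print the plan as a simple table.
    for vlan_id, name in VLANS.items():
        print(f"VLAN {vlan_id:>2}  {name:<10}  {subnet_for(vlan_id)}")
```

A table like this is handy for sanity-checking which segment a VM landed on, for example confirming that a penetration testing VM did not pick up an address on the main network.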

Yeah I know, I still have some “N/A” icons. Maybe one of these days I’ll upgrade to a Ubiquiti Security Gateway. Right now the EdgeRouter X is working just fine.

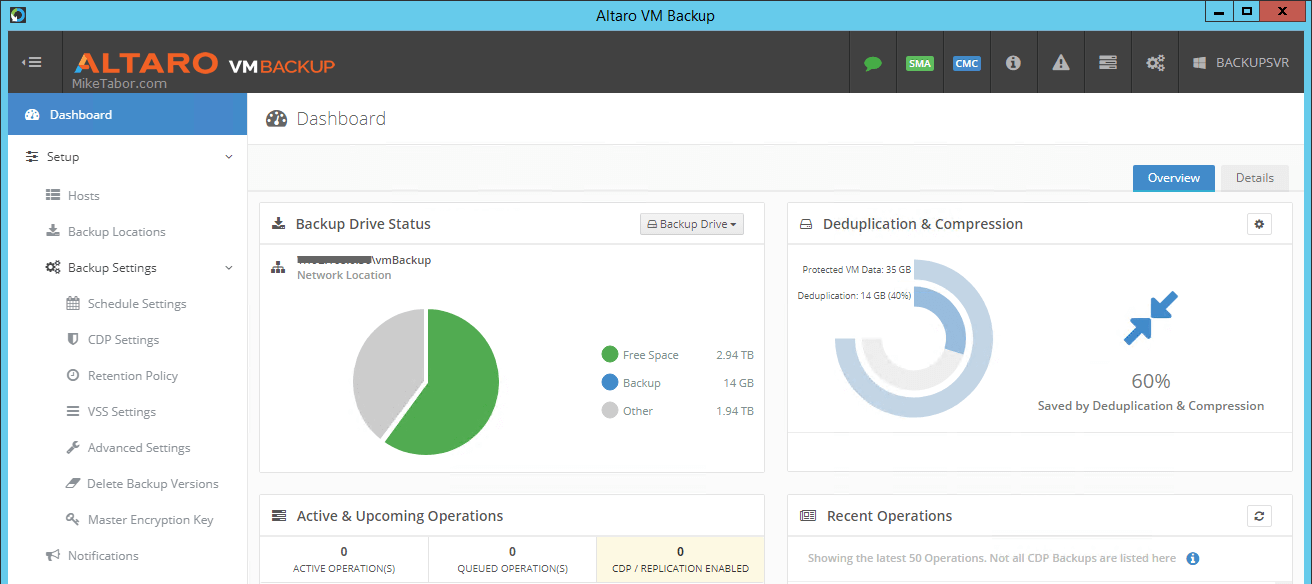

Backups

To back up the virtual machines in the lab I’m using Altaro VM Backup, which backs up the VM’s stored on the Samsung SSD’s to a folder on the Synology NAS. Altaro offers a free NFR license to vExperts as well as a free (2 VMs per host) license to everyone. You can download it here.

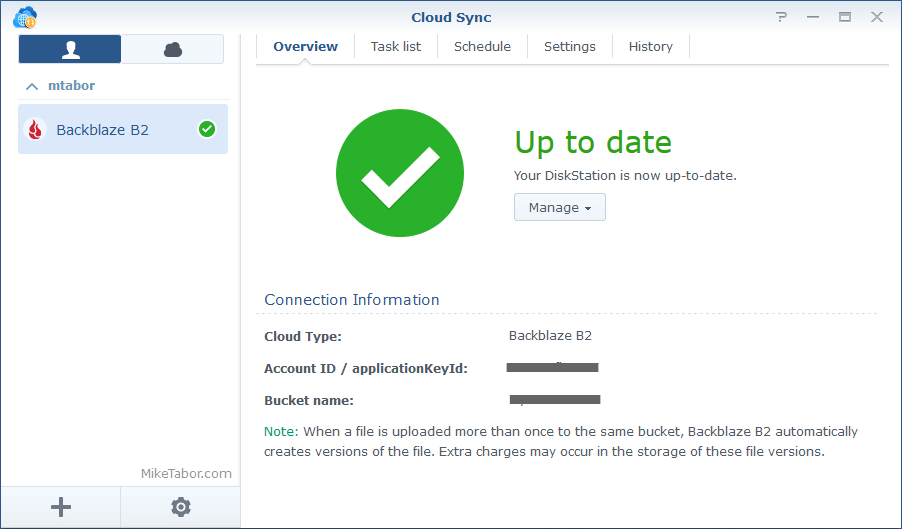

Synology Cloud Sync is used to back up various folders on the NAS to Backblaze B2, which is super inexpensive. Just over 400GB is being backed up to Backblaze for less than $2.00 a month.
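As a rough check on that figure, here is a minimal sketch of the storage cost arithmetic. The rate of $0.005 per GB per month and the 10GB free allowance are assumptions about Backblaze B2 pricing around the time of writing, not numbers from this post, and download or transaction fees are ignored.

```python
# Assumed pricing, not taken from the post: verify against current B2 pricing.
ASSUMED_RATE_PER_GB = 0.005   # USD per GB per month (assumption)
ASSUMED_FREE_GB = 10.0        # free storage allowance in GB (assumption)


def monthly_storage_cost(stored_gb: float,
                         rate_per_gb: float = ASSUMED_RATE_PER_GB,
                         free_gb: float = ASSUMED_FREE_GB) -> float:
    """Estimate the monthly storage bill in USD for a given amount of data."""
    billable_gb = max(stored_gb - free_gb, 0.0)
    return billable_gb * rate_per_gb


if __name__ == "__main__":
    # Roughly the amount mentioned above.
    print(f"${monthly_storage_cost(400):.2f} per month")
```

Under those assumptions, 400GB lands just under the $2.00 mark, which is consistent with the bill described above.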

The End

I believe that pretty well sums up the new ESXi home lab.

If you have any questions or want more details around something please leave a comment below or Tweet me at @tabor_mike and I’ll be happy to talk.

I have a very similar setup. I use a full Ubiquiti setup as well as a 3-node Proxmox cluster (three 8-core Xeon desktops with 32GB each) with a Synology DS1518+ with two expansion bays as the datastore. It works out surprisingly well!

I currently use CrashPlan to back up my Synology but will have to look into Backblaze since it runs natively on the Synology.

Ron I think you’ll like Backblaze. It’s been very reliable for me and for my amount of storage needed has saved me money. It’s also very easy to schedule when the sync happens within the Synology UI.

Can I have more details? How do you store it, in a 19" rack?

I’ll be happy to share more details! I thought about putting this in a small rack or even building my own rack. I even went so far as to buy the rails to do so but decided not to do either. Instead the server is sitting on a shelf out in my garage.

The garage is insulated and even has a small air conditioner. Obviously not as nice or steady as an actual server room, but it should do just fine for my home lab needs.

Looks like a small production setup to me except for those samsung ssd’s. Am I wrong?

Yes, very much so.

Nice information. Thanks for sharing

You are very welcome.

Why two switches if everything is internal on a single host? Keep all of the routing internal and use NSX?

Kasey, I am using two switches due to the location of the server. My main switch and all the hardwired runs go to one part of the house and the server and a couple additional IP cameras run into the garage. Thus the smaller switch out there. The two switches are connected via a Cat6 run.

On the VMware compatibility site I’m seeing the R720 only supports up to ESX 6.5U2. Are you running 6.7U2 (latest at this time) without issues on the R720? I know servers typically work for quite a while, as I only just now got stopped with my DL360 G5 on 6.5 and couldn’t go to 6.7. In looking at my own lab upgrade I wanted to make sure I had some longevity to continue to run the latest ESX versions.

Brian, the VMware compatibility site shows what has been certified compatible. The R720 is an older unit and Dell likely has no intention of getting it certified.

That said, I had zero issues with installing and using ESXi 6.7 U1 on my Dell R720. One thing to keep in mind is the CPU requirements now in ESXi 6.7. In fact I went with an R720 for several reasons, one of which is that most of them come with CPU’s that are allowed per the 6.7 release notes. See here for a list of no longer supported CPUs – https://docs.vmware.com/en/VMware-vSphere/6.7/rn/vsphere-esxi-vcenter-server-67-release-notes.html#compatibility

Hope this helps!

-Michael

Mike,

I have been looking at the R720. My only concern is the power consumption and noise. How much would it cost per month if the R720 is on 24/7?

Appreciate if you could provide the feedback.

Thanks,

Prab, cost is going to depend on how much you pay per kilowatt-hour in your area. When the R720 was connected to my Kill A Watt meter it was averaging around 130 watts. For my area that works out to around $100 a year. This is about a $75 increase, per year, over what I was paying with my 3x Intel NUC’s.
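To estimate this for your own area, the arithmetic can be sketched as below. The 130 watt average and the roughly $100 a year come from the reply above; the function names are just illustrative, and the electricity rate is back-calculated from those two numbers rather than stated anywhere in this post.

```python
HOURS_PER_YEAR = 24 * 365  # server assumed to be powered on 24/7


def annual_kwh(average_watts: float) -> float:
    """Energy used in a year, in kilowatt-hours, at a constant average draw."""
    return average_watts * HOURS_PER_YEAR / 1000


def annual_cost(average_watts: float, rate_per_kwh: float) -> float:
    """Yearly electricity cost in your currency for a given rate per kWh."""
    return annual_kwh(average_watts) * rate_per_kwh


def implied_rate(average_watts: float, yearly_cost: float) -> float:
    """Back out the rate per kWh from a known yearly cost."""
    return yearly_cost / annual_kwh(average_watts)


if __name__ == "__main__":
    print(f"{annual_kwh(130):.1f} kWh per year")
    print(f"implied rate: {implied_rate(130, 100):.4f} per kWh")
    # Swap in your own rate here; 0.15 is only an example value.
    print(f"at 0.15 per kWh: {annual_cost(130, 0.15):.2f} per year")
```

Divide the yearly figure by 12 for a monthly estimate. Actual draw will vary with load, drive count and fan speed, so a meter reading from your own server is the better input.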

Mike, this is an awesome post. I currently have an R710 and I think I need to get rid of it and get an R720 for the 6.7 upgrade. However, if I want to have shared storage for vMotion purposes, I have to set up a NAS, right?

I currently have an R710 with FreeNAS running for my shared storage, but the speed is slow and sometimes it freezes up my vCenter. It bugs the heck out of me. What are your thoughts on shared storage?

Kevin,

If you’re running multiple ESXi hosts you’ll want some kind of shared storage for sure. I have a Synology NAS that I could use, but for running my home lab VM’s I really don’t use it that much anymore. All my home lab VM’s are run locally using the SSD drives in my single R720 server.

-Michael

You’re absolutely correct. At home, I really don’t need multiple hosts. 1 host is enough. I saw that you also have VM for Pentest. What OS or what software do you use for Pentest? I would like to learn that also. Thanks

Kevin,

I run Metasploit, Kali, Parrot, Buscador, and various Windows 7 & 10 VM’s on that network.

-Michael

Mike, I bought the same adapter as in your link, but ESXi 6.7 doesn’t recognize the adapter and cannot see my SSD. Should I use the Dell ESXi 6.7 ISO? I bought the WD Blue 3D NAND 2TB PC SSD. Please help

I have Dell R710 & I found out R710 doesn’t support bifurcation so I cannot use m2 adapter card for SSD. I wish I had known this before buying. :(

Well that’s unfortunate. Maybe sell your R710 and get the R720? :)

-Michael

Hi, nice article and thanks for sharing your ideas and thoughts!

Are you aware whether ESXi 6.5 or 6.7 can somehow natively use a mix of SSDs / M.2 SSD drives and traditional SAS drives to optimize the IO? Or do you need to configure those as separate datastores on the ESX side and “manually” optimize the usage of different disk speeds?

Jouko

Jouko,

Sorry, but I don’t understand what you are asking.

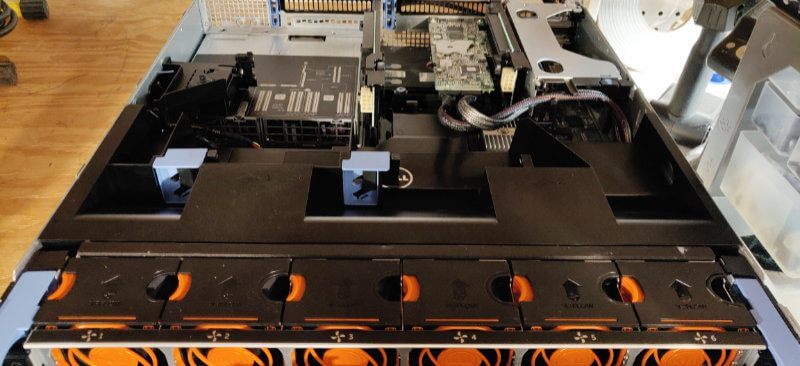

Hi – Interesting post. I see you have the server in the garage. How is the noise of this server?

The server is very quiet without any drives installed. I’ve noticed the fans did seem to speed up just a tiny bit when I added the SATA SSD drive. Even still it’s very manageable where it doesn’t bother me in the least bit when I’m out in the garage.

The garage shares a wall with the living room and at no time have I ever heard the server inside the house. So it’s a win win for me.

-Michael

Mike, can you tell us what you spent and where you bought the stuff? Thanks!!

Mike,

I believe I linked to all or most of the items I purchased – which came from either eBay or Amazon.

Total spend was around $900. That doesn’t include the Synology NAS and its drives, nor does it include the RAID controller, as I already had this lying around. You can usually pick up the Dell PERC H710P for around $50-100. So including that, say just under $1,000 for the server configured above, plus or minus a few bucks.

You could certainly spend much less than that by forgoing the Samsung 860 SATA SSD and RAID controller if you desired and still have a server with a nice chunk of CPU/MEM resources, along with sizable and silly fast local storage thanks to the NVMe drive.

-Michael

Hi Mike,

How much do you pay for the VMWare license? Is there such thing as a free version (not trial that expires in 60 days)?

Thanks

Rudy,

As a VMware vExpert (https://vexpert.vmware.com/) I receive a free license, so I don’t pay anything for it.

Another solution, and one I would highly recommend, would be the VMUG Advantage program (https://community.vmug.com/vmug2019/membership/vmug-advantage-membership). It costs $200 a year and gives you 365-day licenses to a bunch of VMware software. If you’re a VMUG member you can usually get discounts for the Advantage program to save a few more bucks. Many VMUG’s give away a couple of VMUG Advantage memberships as well, so it pays to attend any local VMUG meetings if you can.

-Michael

Hi Mike,

great post! I’m considering building my home lab on NUC like system (I5 8300H) and I’m unable to decide whether to go with Samsung 970 EVO PLUS 500 GB (130 euro) or Samsung 860 EVO M.2 500 GB (90 euro) as primary storage for VMs. I’m tight on budget and not sure if speed of the 970 would be justified (do I really need 3500MB/s write?). What would you recommend? Planning on running 5-6 VMs, nothing heavy, just testing various stuff on Windows/Linux platforms, AD, SQL, etc…

Thanks!

Baki,

Honestly either will work just fine. I love my NVMe, it’s silly fast and even looks cool in my Dell server, but in reality I reserve that drive for my most-used VM’s, like my Plex server and a few different jumpboxes. The rest of my lab resides on my “slower” SATA SSD.

Now that said, I do recall there being some issues with the Intel NUC’s and ESXi reading the M.2 drives at one time, where you had to inject a driver or two. I’m not sure if that is still an issue or not, but that is something to consider and research.

-Michael

Hi Mike, thank you for sharing your build. What PCIe NVMe adapter are you using? Do you happen to have a part number? Also, is there anything special about setting that up in your R720? I just bought an R720 myself, and it has the same RAID card as yours. I believe that to even get it to boot, you need at least two drives in RAID 0 (if I am not mistaken)? (lab noob)

Brad,

The PCIe to M.2 NVMe adapter is just a generic adapter and I don’t recall seeing any names on the card. I have linked to it above.

As for the RAID controller, you do not need two drives to configure the RAID.

-Michael

Hi Mike,

What drive(s) are you using to boot ESXi on the R720?

Frank,

I’m not sure I’m following you. No special drivers are needed to install ESXi on the Dell R720.

-Michael

Hi Mike,

I mean what drive is hosting the OS? You only have the Samsung 970 M.2 and Samsung 860 Sata SSD installed on the server correct?

Frank,

No, those drives are used for local storage. ESXi itself is installed on an 8GB USB thumb drive.

-Michael

Mike, first, thank you for a wonderful guide; I do know it is old by now. Here is my story: ever since I found this thread when you made it, I have wanted to set up an R720 security lab at home, and I just got around to saving up for it. I have a couple of questions. I have the exact server coming in the mail and a pair of 1TB Samsung 860 EVO SSD’s. Should I run these as a mirror for my main tier 2 datastore, or stripe them? My plan for tier 1 storage is a PCIe adapter to NVMe drives, and I was wondering if you could share a link to the ones you know for sure work. This will greatly help me. Thank you in advance; I appreciate any information you have to share… Almost forgot: have you been able to upgrade to ESXi 7 on the R720? I see a lot of talk about a custom Dell EMC version of the image that has some injected drivers. Sorry for my bad English.

Hey Tony,

RAID 0 (striping) is just fine, so long as you have backups or don’t care about the data in the event of a drive failure; otherwise go with RAID 1 (mirroring). As for the adapter, the one linked at the top of the “Compute” section is the only one I have experience with, and to this day it’s running just fine for me.
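The RAID 0 versus RAID 1 trade-off for a pair of 1TB drives can be sketched as a quick comparison. This is a simplified model with a hypothetical helper, `raid_summary`, that assumes identical drives and ignores controller and filesystem overhead.

```python
def raid_summary(level: int, drives: int, drive_tb: float) -> dict:
    """Return usable capacity and tolerated drive failures for RAID 0 or 1."""
    if drives < 2:
        raise ValueError("need at least two drives")
    if level == 0:
        # Striping: all capacity is usable, but any single failure loses the array.
        return {"usable_tb": drives * drive_tb, "failures_tolerated": 0}
    if level == 1:
        # Mirroring: capacity of one drive, survives the loss of all but one copy.
        return {"usable_tb": drive_tb, "failures_tolerated": drives - 1}
    raise ValueError("only RAID 0 and RAID 1 are modelled here")


if __name__ == "__main__":
    for level in (0, 1):
        print(f"RAID {level}: {raid_summary(level, drives=2, drive_tb=1.0)}")
```

With two 1TB drives, striping gives the full 2TB with no protection, while mirroring gives 1TB and survives one drive failure, which is why the backup question decides it.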

I have not upgraded my ESXi home lab host to ESXi 7, as the R720 is not in the compatibility matrix and I just haven’t put much effort into finding a custom image or injecting my own drivers. ESXi 6.7 works just fine for me at the moment.

Hope this helps!

-Michael